Description

Project

Inspiration

Hearing is an essential way for us to interact with the outside world and one of the most elementary tools of communication on which societies are built. However, there are 360 million hearing-impaired people around the world, accounting for 5% of the world's total population.[1]

Suddenly, the audible world came to an end. Demonic buzzing replaced the ambient sounds I had been hearing for years.[2]

Despite the scale of the problem, hearing loss is still considered "incurable" for most patients.[3]

The physical causes of hearing loss are complicated. The human hearing system is a complex pipeline with both mechanical and neural components. While the mechanical part can be repaired surgically, treatments for sensorineural hearing loss remain highly experimental and largely ineffective.[4]

One ingenious invention, the cochlear implant, has greatly changed the treatment landscape. This tiny device converts sound directly into electrical pulses that stimulate the endings of the cochlear nerve, the structure that carries auditory information to the brain. However, among other drawbacks, such a device significantly increases the risk of bacterial meningitis.[5] We cannot help but wonder: "What if the bulky electronics could be replaced by a layer of engineered cells?"

Research Summary

Compared with recent achievements in engineering cells to sense light and chemicals, namely optogenetics and chemogenetics, there has been little systematic research on engineering cells to sense sound.

Many cells outside the ear also respond to mechanical forces: the endothelial cells lining blood vessels, for example, sense fluid shear stress, and mechanosensitive (MS) ion channels allow us to perceive mechanical stimuli such as touch.[6] We hypothesize that synthetic biology approaches can be used to engineer non-sensory cells to detect different aspects of sound, such as frequency and intensity. Our project consists of three aims:

- Establishing systematic characterization tools to analyze the response of Chinese hamster ovary (CHO) cells to mechanical signals;

- Establishing engineered CHO cell lines carrying a synthetic circuit that contains either the mammalian mechanosensitive channel Piezo1 or the transient receptor potential canonical 5 (TRPC5) channel, and quantitatively characterizing how the mechanical responses of the two cell lines differ;

- Developing a platform for further improving the mechanosensitive ion channels by directed evolution.

We call this ensemble of methods audiogenetics.

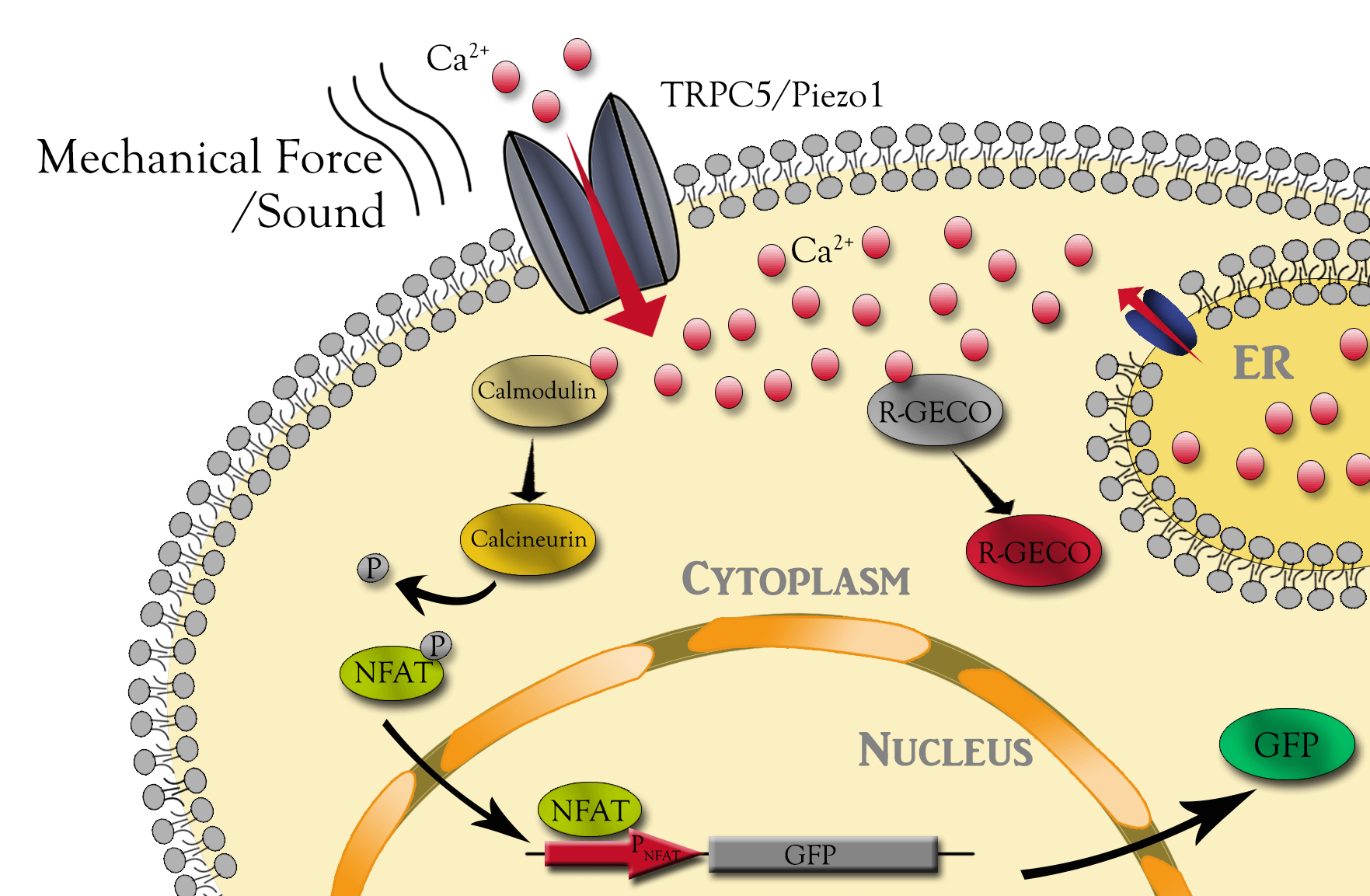

MS channels open and close in response to various mechanical stresses; when open, they allow extracellular cations such as calcium to enter and depolarize the cell. Although this has not been well studied, they could also transduce the energy of sound waves, acting as an extracellular input signal, into intracellular downstream signals. Can they also convert these transient stimuli into stable gene expression? To address these questions, we first stably integrated a synthetic circuit into the CHO cell genome, comprising tunably expressed MS channels, a genetically encoded calcium sensor, and a calcium-responsive gene expression cassette. Calcium sensor activity was measured and validated by live-cell fluorescence microscopy, using ionomycin as a positive stimulus. The downstream NFAT promoter reporter was validated by chronic sound stimulation of cells expressing Piezo1.
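Readouts from genetically encoded calcium sensors are often summarized as ΔF/F0, the change in fluorescence relative to a pre-stimulus baseline. The sketch below assumes an intensiometric, GCaMP-like sensor (the sensor used here is not named), a per-cell mean-intensity trace, and a known stimulus frame. `delta_f_over_f0`, `is_responder`, the 0.5 threshold, and the example trace are hypothetical illustrations, not the team's analysis pipeline or data.

```python
from statistics import mean


def delta_f_over_f0(trace, stim_frame):
    """Return the dF/F0 trace, using the mean of all pre-stimulus frames as F0.

    trace: per-frame mean fluorescence of one cell (arbitrary units).
    stim_frame: index of the first frame after the stimulus (e.g. ionomycin).
    """
    if stim_frame < 1:
        raise ValueError("need at least one baseline frame before the stimulus")
    f0 = mean(trace[:stim_frame])
    if f0 <= 0:
        raise ValueError("baseline fluorescence must be positive")
    return [(f - f0) / f0 for f in trace]


def is_responder(trace, stim_frame, threshold=0.5):
    """Hypothetical criterion: peak post-stimulus dF/F0 exceeds `threshold`."""
    dff = delta_f_over_f0(trace, stim_frame)
    return max(dff[stim_frame:]) > threshold


if __name__ == "__main__":
    # Synthetic trace for illustration: flat baseline, then a rise after frame 5.
    trace = [100, 101, 99, 100, 100, 180, 250, 220, 160, 120]
    print([round(x, 2) for x in delta_f_over_f0(trace, stim_frame=5)])
    print(is_responder(trace, stim_frame=5))
```

Because ionomycin is a calcium ionophore, it raises intracellular calcium independently of any MS channel, so a strong ΔF/F0 response to it validates the sensor itself rather than mechanosensing.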

The second question is whether wild-type and engineered CHO cells sense mechanical stress differently. After extensive trial and error, we established three mechanical stimulation systems:

- hypo-osmotic shock with transient, tunable osmotic pressure;

- tunable shear force delivered by a customized microfluidic chip;

- a piezo-driven sound stimulation chamber for cell culture, with tunable frequency and amplitude.
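Each stimulation system needs its stimulus expressed in physical units. The helpers below are a minimal sketch under textbook idealizations: the van 't Hoff relation ΔΠ = Δc·R·T for the initial osmotic gradient after diluting medium with pure water, the parallel-plate formula τ = 6μQ/(wh²) for wall shear stress in a wide, shallow rectangular channel, and a plain sine wave as the drive signal for the sound chamber. The function names, the default viscosity of 1 mPa·s, and the 48 kHz sample rate are assumptions, not parameters reported for these setups.

```python
import math

R = 8.314        # gas constant, J/(mol*K)
T_37C = 310.15   # 37 degrees C in kelvin


def osmotic_pressure_drop(medium_mosm_per_l, water_fraction, temp_k=T_37C):
    """Ideal (van 't Hoff) osmotic pressure difference in Pa right after
    diluting medium with pure water to the given volume fraction."""
    if not 0 <= water_fraction < 1:
        raise ValueError("water_fraction must be in [0, 1)")
    # 1 mOsm/L equals 1 osmol/m^3, so the value is already in SI units.
    delta_c = medium_mosm_per_l * water_fraction
    return delta_c * R * temp_k


def wall_shear_stress(flow_ul_per_min, width_m, height_m, viscosity_pa_s=1e-3):
    """Wall shear stress in Pa for a wide rectangular channel (w >> h),
    parallel-plate approximation tau = 6 * mu * Q / (w * h^2)."""
    q = flow_ul_per_min * 1e-9 / 60.0  # uL/min -> m^3/s
    return 6.0 * viscosity_pa_s * q / (width_m * height_m ** 2)


def sine_drive(freq_hz, amplitude, duration_s, sample_rate=48_000):
    """16-bit PCM samples of a sine wave; `amplitude` is a fraction of full scale."""
    if not 0 <= amplitude <= 1:
        raise ValueError("amplitude must be in [0, 1]")
    if freq_hz >= sample_rate / 2:
        raise ValueError("frequency must be below the Nyquist limit")
    n = int(round(duration_s * sample_rate))
    peak = amplitude * 32767
    return [round(peak * math.sin(2 * math.pi * freq_hz * i / sample_rate))
            for i in range(n)]
```

For example, `osmotic_pressure_drop(300, 0.5)` (a 1:1 dilution of a medium assumed to be about 300 mOsm/L) gives roughly 387 kPa, and `wall_shear_stress(10, 1e-3, 100e-6)` (10 µL/min through a 1 mm × 100 µm channel) gives about 0.1 Pa. The osmotic figure is only the initial, ideal gradient; it relaxes as water enters the cells.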

To our surprise, our data suggest that wild-type and engineered cells respond to the different mechanical stresses in significantly different ways.
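The section does not say which statistical test supports this comparison. One assumption-light option for comparing, for instance, per-cell peak responses of wild-type and engineered cells under the same stimulus is a two-sample permutation test on the difference of means, sketched below with the standard library only. The function name and defaults are illustrative.

```python
import random
from statistics import mean


def permutation_test(a, b, n_perm=10_000, seed=0):
    """Two-sided permutation test for a difference in means between a and b.

    Returns (observed difference, estimated p-value).
    """
    rng = random.Random(seed)
    observed = mean(a) - mean(b)
    pooled = list(a) + list(b)
    n_a = len(a)
    extreme = 0
    for _ in range(n_perm):
        # Randomly relabel cells as "group a" or "group b".
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction keeps the estimate away from an impossible p = 0.
    return observed, (extreme + 1) / (n_perm + 1)
```

With few cells per group, the smallest attainable p-value is limited by the number of distinct relabelings, so imaging more cells per condition sharpens the test.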

Although time was very limited, we also began engineering TRPC5 to sense different sound parameters. We devised a directed evolution method that selects a mutant library in functional, sound-sensing CHO cells. It is an iterative approach consisting of three steps: 1) randomly mutating the putative mechanosensing domain of the TRPC5 channel protein, 2) integrating a single copy of each mutant into the same genomic locus of CHO cells, and 3) selecting CHO cells expressing the TRPC5 mutant library by their downstream NFAT-promoter-driven GFP expression.
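The three-step loop can be sketched as a small simulation. Everything here is hypothetical: sequences are plain DNA strings, the "putative mechanosensing domain" is an arbitrary index range, and `score` stands in for measuring NFAT-driven GFP after sound stimulation (in the lab this is a cell-sorting or imaging step, not a function call).

```python
import random

BASES = "ACGT"


def mutate_region(seq, start, end, rate, rng):
    """Copy `seq` with random point substitutions at per-base probability
    `rate`, restricted to seq[start:end] (the targeted domain)."""
    out = list(seq)
    for i in range(start, end):
        if rng.random() < rate:
            out[i] = rng.choice([b for b in BASES if b != out[i]])
    return "".join(out)


def evolve(parent, start, end, score, rounds=3, library_size=1000,
           keep=10, rate=0.01, seed=0):
    """Iterative directed evolution: mutate the domain, rank every variant
    by `score`, and use the `keep` best variants as the next parents."""
    rng = random.Random(seed)
    parents = [parent]
    for _ in range(rounds):
        # Step 1: build a mutant library from the current parents.
        library = [mutate_region(rng.choice(parents), start, end, rate, rng)
                   for _ in range(library_size)]
        # Steps 2-3: single-copy integration and GFP-based selection,
        # simulated here by ranking with the stand-in `score` function.
        library.sort(key=score, reverse=True)
        parents = library[:keep]
    return parents[0]
```

Single-copy integration at one defined locus matters for this loop: every cell then carries exactly one variant at the same genomic position, so differences in GFP can be attributed to the mutation rather than to copy number or integration site.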

Beyond engineering synthetic hearing cells that respond to different sounds, audiogenetics could also provide new synthetic biology research tools as alternatives to chemogenetics and optogenetics, owing to its key advantages: deep tissue penetration, high temporal resolution, and submillimeter spatial resolution. It could also be used in cell replacement therapy, for example to modulate the physiology or gene expression of transplanted cells in vivo.
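The spatial-resolution claim can be sanity-checked with the acoustic wavelength, λ = c/f, since the size of a focused acoustic spot scales roughly with λ. The sketch assumes c ≈ 1540 m/s, a value commonly used for soft tissue; the function name is illustrative.

```python
SPEED_OF_SOUND_TISSUE = 1540.0  # m/s; assumed soft-tissue average


def wavelength_mm(freq_hz, c=SPEED_OF_SOUND_TISSUE):
    """Acoustic wavelength in millimetres, lambda = c / f."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    return c / freq_hz * 1e3


if __name__ == "__main__":
    for f in (20e3, 1e6, 5e6):
        print(f"{f / 1e3:g} kHz -> {wavelength_mm(f):.3f} mm")
```

At the 20 kHz upper edge of human hearing the wavelength in tissue is about 77 mm, whereas it falls to about 1.5 mm at 1 MHz and about 0.3 mm at 5 MHz, so submillimeter targeting in deep tissue would rely on MHz-range ultrasound rather than audible sound.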

Formula of Feeling Sound

References

- [1] Oishi, N.; Schacht, J. (2011). "Emerging treatments for noise-induced hearing loss". Expert Opinion on Emerging Drugs, 16(2), 235–245. PMID 21247358.

- [2] Our team member Fan Jiang experienced hearing loss at age 5, which took the audible world away from him for half a year.

- [3] Nakagawa, T. (2014). "Strategies for developing novel therapeutics for sensorineural hearing loss". Frontiers in Pharmacology, 5. doi:10.3389/fphar.2014.00206.

- [4] Sun, H.; Huang, A.; Cao, S. (2011). "Current status and prospects of gene therapy for the inner ear". Human Gene Therapy, 22, 1311–1322.

- [5] Reefhuis, J., ... & Costa, P. (2003). "Risk of bacterial meningitis in children with cochlear implants". New England Journal of Medicine, 349(5), 435–445.

- [6] Christensen, A. P.; Corey, D. P. (2007). "TRP channels in mechanosensation: direct or indirect activation?". Nature Reviews Neuroscience, 8, 510–521.